Are You Talking with a Human?

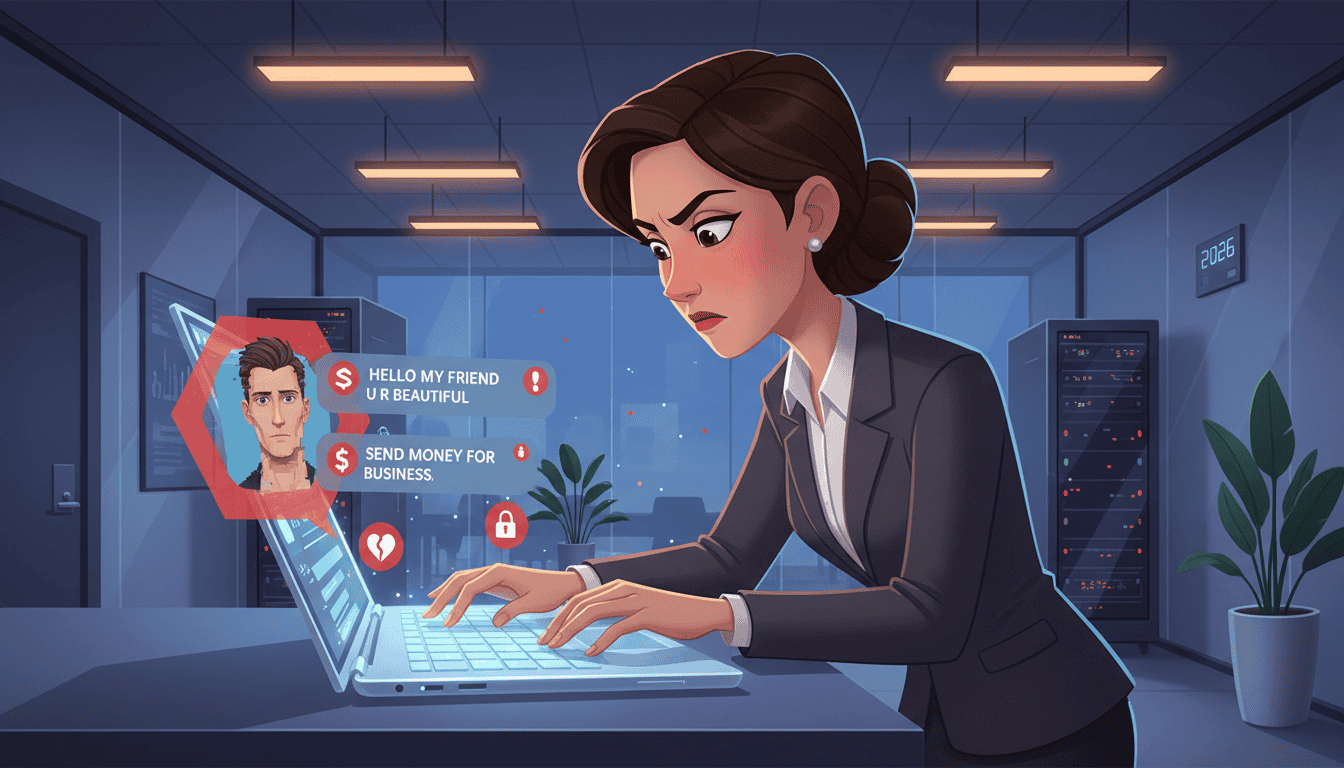

Imagine losing more than a billion dollars to people who don’t even exist. According to the FBI’s Internet Crime Complaint Center, that’s the scale of annual losses reported in recent years from online romance and investment fraud, and those numbers likely undercount the true total because many victims never report what happened. In my experience reviewing these apps, the smartest scams read like love stories until you realize they’re financial schemes.

What makes these operations different from traditional phishing is the timeline: they don’t rely on panic or a one-off trick. Scammers cultivate trust over weeks, months, or even years, using high-quality images, believable backstories, and sometimes AI-generated videos to create the illusion of a real person. People from all walks of life, including military personnel, healthcare workers, and people going through major life transitions, have fallen victim to these long cons.

The convergence of generative AI and deliberate psychological manipulation has created a new landscape of deception. Reported losses capture only part of the picture because emotional harm, embarrassment, and career concerns often keep victims silent. I’ve reviewed hundreds of cases — you’re not alone, and there are practical steps you can take (see “Protecting Yourself and Your Relationships from Scammers” below).

Key Takeaways

- AI-era digital deception causes massive annual losses and increasingly sophisticated fraud across platforms

- These operations build emotional connections over extended periods rather than using quick scare tactics

- Military personnel, healthcare workers, and people in transition are commonly targeted

- Reported financial damages significantly underestimate the true scope due to underreporting

- Advanced technology combined with psychological manipulation makes detection more challenging

- Understanding how these schemes work is the most important protection

- Learn to distinguish genuine romance from manufactured relationships and always verify before sending money

If you’re tightening your profile security, check the Tinder Reset guide for practical steps. If you suspect a scam, see the steps under “Protecting Yourself and Your Relationships from Scammers” below, and bookmark this page to share with friends and family.

Understanding AI Romance Scams in 2026

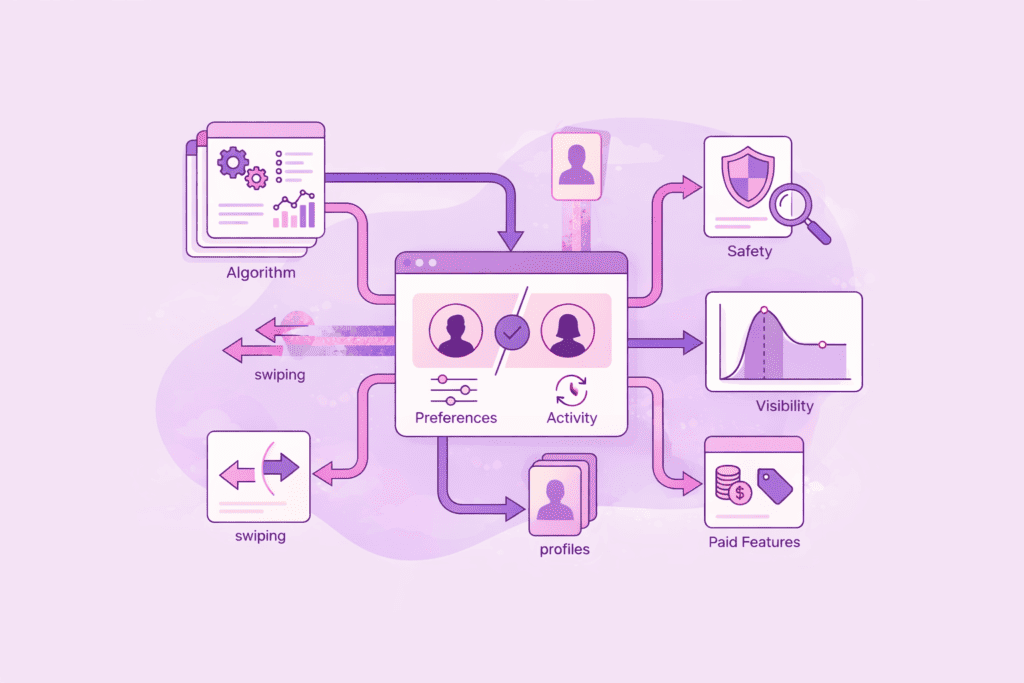

Today’s digital con artists combine psychological manipulation with cutting-edge technology to build trust in highly systematic ways. These evolving scams represent a fundamental shift from the short, opportunistic phishing schemes of the past to prolonged, personalized operations that treat relationships as a scalable attack surface.

What Defines an AI Romance Scam?

Modern deception schemes create hyper-realistic personas that communicate across multiple platforms and channels. They cultivate emotional bonds over weeks or months using tailored messages that feel personal — not generic phishing blasts.

Scammers now use automation and generative models to manage dozens of conversations simultaneously, each one adapted to the target’s profile and behavior. In my experience, the most convincing profiles blend AI-generated media with curated social histories and believable small details to reduce suspicion.

The Role of Generative AI in Shaping Scam Tactics

Generative technology has erased many of the old warning signs (bad grammar, clumsy photos). Criminal operations can now assemble complete digital identities with fabricated backstories, plausible photos, forged documents, and short video clips that mimic natural speech.

These tools let romance scams scale across dating platforms and social media networks, so a handful of operators can run what amounts to a large network targeting thousands of people.

It pays to understand how these systems build believable narratives, with each message shaped by research on the target and by behavioral signals from the conversation. That knowledge gives you the context to spot manipulation and to reach for the right verification tools when a conversation feels off.

The Psychological Long Con: How Emotional Manipulation Works

The most sophisticated deception schemes don’t attack your finances first—they target your heart and your decision-making. By building artificial intimacy that feels deeply personal, scammers turn emotional investment into a weapon that makes later financial requests seem reasonable.

Techniques Used to Build Trust Over Time

Deception artists commonly follow a predictable four-stage arc. Stage one is rapid intimacy: warm, highly attentive messages that create an instant bond and lower your guard. Example scripted message: “I feel like we finally understand each other—I’ve never clicked with someone like this.”

Stage two is manufactured vulnerability: the scammer shares a dramatic personal story or hardship designed to trigger empathy and deepen trust. Example scripted message: “I wish I could tell you everything but I can’t right now—I’m dealing with something with my family and I need someone I can trust.”

Stage three is isolation: requests to move the conversation to a private app, explanations about secrecy or restricted contact, and discouragement of outside verification. Stage four is the ask: requests for money or help framed as emergencies or investment opportunities, so giving feels like compassion rather than a transaction.

In my experience reviewing these apps, I’ve seen the exact four-stage arc play out in dozens of conversations — the patterns are consistent enough that spotting them early is one of the best defenses.

The Impact of Manufactured Vulnerability

When someone shares a convincing-sounding hardship, your protective instincts kick in and you may accept claims at face value. That manufactured trust becomes the scammer’s strongest tool.

Cognitive load and timing matter: scammers often choose moments when victims are tired, stressed, or emotionally vulnerable so analytical defenses are lower. They also leverage high-frequency messaging to dominate your attention and shape the conversation.

If you notice rapid progression, scripted-sounding messages, or repeated avoidance of live calls and verification, pause and consult a trusted friend before continuing the conversation. A simple “challenge-response” during a spontaneous video call (ask them to say a random phrase or hold up today’s newspaper) often exposes scripted accounts or deepfake video attempts.

Spotting Fake Profiles and Deepfake Media

The key to protecting yourself online is developing a sharp eye for the small details fake accounts often get wrong. That means checking profile information across platforms and closely analyzing any images or videos someone shares before you trust them.

Recognizing Inconsistent Profile Details

Start by scrutinizing basic profile facts: job titles, locations, and life events should align across accounts. Look for impossible timelines, contradictory details, or profiles with very few friends or connections.

Perform a reverse image search on profile photos (Google Lens, TinEye). If the same photo appears on multiple accounts, stock sites, or belongs to a different person, treat it as a major red flag—scammers commonly reuse stolen images across accounts and platforms.

Also cross-check usernames, email handles, and any links they share; small mismatches often reveal coordinated fraud or cloned identities. If something feels off, slow down the conversation and verify before you share personal information.

Identifying Deepfakes and AI-Generated Images

What I find most dangerous about the 2026 shift is how quickly videos and images can be faked. Pre-recorded clips and AI-generated media can look convincing at a glance, so it helps to have a checklist of artifacts to look for.

Common AI-generation artifacts and signs to inspect: weird fingers or hands, inconsistent backgrounds or odd shadows, warped teeth or face boundaries, unnatural blinking or facial micro-movements, and irregular lighting across frames. These cues are subtle but detectable when you look frame-by-frame.

Beyond still-image checks, perform a reverse video search: extract a clear frame from the clip (most phone screenshots work) and run that frame through reverse-image tools. There are emerging services and forensic tools that search by video frame.

Be wary of accounts that only send pre-recorded videos or refuse spontaneous interaction. Excuses like “bad connection” or “can’t do video for security reasons” are common tactics to avoid live verification.

During a live call, use simple challenge-response prompts that are hard to pre-record: ask the person to hold up today’s newspaper, say a random word you choose, or wave a specific hand. If they refuse or the video glitches oddly, consider that a strong sign the account may be fake.
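Challenge prompts work best when they can’t be anticipated. The sketch below is a hypothetical helper (not a feature of any dating app) that uses Python’s standard `secrets` module to pair a random word with a random action, so a pre-recorded or scripted clip has no way to match it. The word and action lists are illustrative assumptions; swap in your own.

```python
import secrets

# Illustrative lists (assumptions): replace them with your own words and actions.
WORDS = ["lantern", "pickle", "orbit", "marble", "thunder", "cactus", "violin", "harbor"]
ACTIONS = [
    "wave your left hand",
    "wave your right hand",
    "touch your nose",
    "hold up three fingers",
    "turn your head to the left",
]


def make_challenge() -> str:
    """Return a hard-to-pre-record prompt for a live video call."""
    # secrets.choice draws from the operating system's secure random source,
    # so the word/action combination can't be predicted in advance.
    word = secrets.choice(WORDS)
    action = secrets.choice(ACTIONS)
    return f'Please say "{word}" and then {action}.'


if __name__ == "__main__":
    print(make_challenge())
```

Generate the prompt during the call and don’t reuse it; a fresh combination each time is what makes pre-recording useless.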

Practical notes: keep an eye on the account’s associated app and email handles, and watch for phishing attempts delivered via links or attachments; scammers often move victims to off-app email or messaging to isolate them. If you manage multiple dating profiles, apply the same verification steps across social media and messaging apps to protect your identity.

Real-World Cases and Trends in AI Scams

Understanding documented incidents helps you recognize repeatable patterns used by criminal enterprises and spot the signals before they become personal losses. These case types show how romance and investment narratives combine at scale in 2026.

CryptoRom Networks and Their Financial Impact

The CryptoRom model is a hybrid fraud operation that begins with friendly messages on dating platforms and evolves into pressure to join exclusive cryptocurrency investment opportunities. Scammers build trust through conversation and then introduce fake trading dashboards or opaque platforms that show rapid gains — victims discover the truth when withdrawals fail.

In my review of several CryptoRom takedowns, what stood out was how quickly “investment” language builds legitimacy: once someone believes a relationship and a purported market opportunity are linked, pressure to send money (often as crypto) escalates rapidly.

“Victims believe they’re building both relationships and financial futures simultaneously.”

Military Impersonation and Social Engineering Examples

Military impersonation scams exploit public trust in service members. These operations use stolen photos, polished scripts, and social engineering to explain away requests for secrecy or restricted communication — for example, claiming they can’t do video calls due to “security” and asking victims to communicate off-app.

Agencies report thousands of related complaints each year; exact figures vary by year and jurisdiction, so check the relevant agency reports or court documents for current numbers.

These real-world examples show common warning signs: urgent requests for money, exclusive “market” access that requires immediate investment, and rapid escalation from conversation to financial ask. Be especially cautious when someone pushes cryptocurrency or off-platform payments — crypto transactions are often irreversible and favored by scammers.

If you track these cases, note the date and a primary source (court filing, agency report, or investigative journalism) for each one; that makes it easier to confirm the numbers and follow ongoing developments in 2026.

Protecting Yourself and Your Relationships from Scammers

Your first line of defense starts with straightforward identity verification everyone can do. Before you develop emotional attachments, run a few quick checks to confirm you’re talking to a real person — it often takes less than a minute.

Practical Strategies to Verify Identity

Insist on a spontaneous live video call and use challenge-response prompts that are hard to pre-record: ask them to say a random phrase you choose, hold up today’s newspaper, or wave a specific hand. In my experience reviewing these apps, the fastest wins come from a 30-second live check — a quick spontaneous call cuts off scripted scams.

Beyond live calls, expand verification to media: perform a reverse image search on every profile photo (Google Lens, TinEye) and a reverse video search workflow for clips — extract a clear frame (screenshot or video frame grab) and run that image through reverse-image tools. Watch for AI-generation artifacts like weird fingers, inconsistent backgrounds, warped teeth, irregular lighting, or unnatural blinking; these signs often reveal deepfake media.

Ask direct, open questions when stories conflict. The FTC recommends asking non-confrontational, clarifying questions (for example: “Can you tell me how that worked again?”) rather than accusing language. Legitimate connections welcome verification; scammers often become defensive or dodge.

Tips for Securing Your Personal and Financial Information

Never share financial account details, Social Security numbers, or workplace information with someone you’ve only met online. Maintain separate email addresses and accounts for dating that aren’t tied to your banking or primary communications, and enable two-factor authentication on all important accounts. Use a password manager to generate unique passwords and reduce account compromise risk.
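A password manager handles generation and storage for you, but to show what a “unique, random password” means in practice, here is a minimal Python sketch built on the standard `secrets` module, following the pattern shown in Python’s own `secrets` documentation. It is an illustration only, not a substitute for a password manager, which also remembers and fills each password so you never reuse one.

```python
import secrets
import string


def generate_password(length: int = 20) -> str:
    """Generate a random password with at least one lowercase letter,
    one uppercase letter, one digit, and one punctuation character."""
    if length < 4:
        raise ValueError("length must be at least 4")
    alphabet = string.ascii_letters + string.digits + string.punctuation
    while True:
        # Each character comes from a cryptographically secure random source.
        password = "".join(secrets.choice(alphabet) for _ in range(length))
        # Retry until every character class is represented.
        if (any(c.islower() for c in password)
                and any(c.isupper() for c in password)
                and any(c.isdigit() for c in password)
                and any(c in string.punctuation for c in password)):
            return password
```

Calling `generate_password()` once per account gives each one its own password, which limits the damage if any single site is compromised.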

Watch carefully for phishing through suspicious links, attachments, or off-platform websites. Scammers often move the conversation to email or another messaging app to isolate victims; treat unexpected links or requests to sign up on unfamiliar websites as high risk. If you receive an email that appears to come from a dating app, check the sender address and never download attachments unless you can independently verify the source.
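Checking the sender address means looking at the actual address, not the display name, and making sure the domain really belongs to the app. The sketch below shows that check in Python using the standard `email.utils.parseaddr` function; `example-dating.com` is a hypothetical domain standing in for whatever official domain your app uses. It only catches lookalike or mismatched addresses: a forged header can still show the right domain, so treat a pass as “not obviously fake,” not as proof.

```python
from email.utils import parseaddr


def sender_domain(from_header: str) -> str:
    """Extract the lowercase domain from a From: header value ("" if none)."""
    # parseaddr separates the display name from the real address,
    # so '"Dating App" <x@evil.net>' yields the address 'x@evil.net'.
    _, address = parseaddr(from_header)
    if "@" not in address:
        return ""
    return address.rsplit("@", 1)[1].lower()


def is_expected_sender(from_header: str, expected_domains: set[str]) -> bool:
    """True only if the domain is an expected one or a subdomain of it."""
    domain = sender_domain(from_header)
    if not domain:
        return False
    # Exact match or a true subdomain ("mail.example-dating.com"), which
    # rejects lookalikes such as "example-dating.com.evil.net".
    return any(domain == d or domain.endswith("." + d) for d in expected_domains)
```

Note that a display name like `"support@example-dating.com"` in front of a different address does not fool this check, because only the address part is inspected.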

Document interactions by saving messages, screenshots, and profile links. The FBI and FTC recommend preserving transaction details and communications; this record helps investigators spot patterns and supports your report. If you start to feel pressured for money, stop all transfers immediately and contact your bank for help.
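If you save evidence as files, recording a cryptographic hash and a timestamp when you collect each one lets you show later that a screenshot or chat export hasn’t been altered. The sketch below is one hypothetical way to keep such a log in Python with the standard `hashlib` and `json` modules; the file names and record fields are assumptions, not a format the FBI, FTC, or any platform requires.

```python
import hashlib
import json
from datetime import datetime, timezone
from pathlib import Path


def file_sha256(path: Path) -> str:
    """Return the SHA-256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def log_evidence(path: Path, note: str, log_file: Path) -> dict:
    """Append one record (file name, hash, UTC time, note) to a JSON Lines log."""
    record = {
        "file": path.name,
        "sha256": file_sha256(path),
        "logged_at_utc": datetime.now(timezone.utc).isoformat(),
        "note": note,
    }
    # One JSON object per line keeps the log easy to append to and to read back.
    with log_file.open("a", encoding="utf-8") as f:
        f.write(json.dumps(record) + "\n")
    return record
```

Keep the original files alongside the log; the hash only proves a file is unchanged if you still have the file to re-hash.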

Never send money through wire transfer, cryptocurrency, or gift cards to anyone you haven’t met in person. Those payment methods are often irreversible and favored by scammers because they are difficult to trace or recover.

Quick Verification Checklist (copy/paste)

- Ask for a spontaneous video call with a challenge-response prompt (random word/newspaper).

- Run reverse image and reverse video searches on photos/videos.

- Check email/app handles and look for inconsistent profile details across social media.

- Enable 2FA and use unique passwords; keep dating accounts separate from bank/primary email.

- If asked for money, pause, document everything, and contact your bank and platform support immediately.

If you need immediate help: contact your bank to freeze payments, save all evidence, and report the profile to the app’s support and to IC3/FTC.

Law Enforcement and Technology Solutions Against Scams

Behind the scenes, specialized teams in law enforcement and the private sector work to identify and dismantle sophisticated fraud operations. These groups combine human investigators with automated tracking systems to map criminal networks and prioritize takedowns across multiple platforms.

Modern detection tools analyze persona footprints across social media and dark-web forums, flagging reused photos, suspicious email patterns, and linguistic markers that reveal coordinated schemes. Intelligence teams correlate data from multiple accounts to identify networks rather than isolated profiles.
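Many reused-photo checks rely on some form of perceptual hashing, where visually similar images get similar fingerprints even after resizing or brightness changes. The methods specific detection tools use vary and are often proprietary, so the sketch below shows one of the simplest textbook variants, an “average hash,” in plain Python. It assumes each image has already been shrunk to an 8x8 grid of grayscale values (an imaging library would normally do that step), and the 5-bit threshold is an illustrative assumption.

```python
def average_hash(pixels: list[list[int]]) -> int:
    """Compute an average hash from an 8x8 grid of grayscale values (0-255).

    Each of the 64 bits is 1 if that pixel is brighter than the grid's mean.
    """
    flat = [v for row in pixels for v in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for v in flat:
        bits = (bits << 1) | (1 if v > mean else 0)
    return bits


def hamming_distance(a: int, b: int) -> int:
    """Count the bits that differ between two hashes."""
    return bin(a ^ b).count("1")


def likely_same_image(a: list[list[int]], b: list[list[int]], threshold: int = 5) -> bool:
    """Treat two images as near-duplicates if their hashes differ in few bits."""
    return hamming_distance(average_hash(a), average_hash(b)) <= threshold
```

Because the hash compares each pixel to the image’s own average, a uniformly brightened copy of a stolen photo produces the same fingerprint, which is the kind of reuse these systems try to flag.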

How Agencies Monitor and Combat Fraud

The FBI’s Internet Crime Complaint Center collects victim reports and coordinates national and international investigations; similarly, other agencies and platform safety teams share indicators to interrupt campaigns. These coordinated efforts turn individual reports into actionable intelligence and help track actors across jurisdictions.

Security firms and platform trust-and-safety teams deploy scalable systems that flag impersonation accounts and automated networks. These tools identify look-alike profiles mimicking service members or officials and surface patterns that human reviewers then investigate.

The Role of Technology in Scam Detection

Synthetic-media forensics tools analyze images and videos for signs of artificial generation — from metadata anomalies to pixel-level artifacts. When combined with account- and network-level analysis, these tools speed identification of staged photos and manipulated documents used in deception campaigns.

Once fraudulent accounts are identified, takedown processes and cross-platform coordination remove them and close the infrastructure used to operate at scale. Community-level threat intelligence programs share early warnings with high-risk groups so members can protect themselves before emotional connections form.

The uncomfortable truth about 2026 is that generative tools have moved faster than many user protections. Agencies and platforms are catching up, but you still need personal verification practices.

Recommended tools and notes (examples only): open-source and commercial forensics tools can surface deepfake markers, and network-intelligence platforms correlate reused media and account signals; beware of false positives and respect privacy when running checks. When reporting, include concise, structured data (profile links, timestamps, screenshots) to help support teams triage faster; the FAQ below covers where to file reports.
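“Concise, structured data” can be as simple as filling in the same few fields every time. The sketch below is a hypothetical Python data structure for organizing a report before you paste it into a platform’s support form; the class name, field names, and example values are all assumptions, and no app or agency requires this format.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class ScamReport:
    """Hypothetical structure for a concise report to a platform's support team."""
    profile_url: str
    platform: str
    first_contact: str            # ISO 8601 date, e.g. "2026-01-15"
    summary: str
    money_requested: bool = False
    payment_method: str = ""      # e.g. "gift cards", "cryptocurrency", "wire transfer"
    evidence_files: list[str] = field(default_factory=list)

    def to_json(self) -> str:
        """Machine-readable version, e.g. to keep with your evidence files."""
        return json.dumps(asdict(self), indent=2)

    def to_text(self) -> str:
        """Plain-text version for pasting into a support form."""
        lines = [
            f"Platform: {self.platform}",
            f"Profile: {self.profile_url}",
            f"First contact: {self.first_contact}",
            f"Money requested: {'yes' if self.money_requested else 'no'}",
        ]
        if self.payment_method:
            lines.append(f"Payment method requested: {self.payment_method}")
        if self.evidence_files:
            lines.append("Evidence: " + ", ".join(self.evidence_files))
        lines.append(f"Summary: {self.summary}")
        return "\n".join(lines)
```

Leading with the platform, profile link, and whether money was requested puts the details a reviewer needs first; the free-text summary comes last.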

Reporting suspicious activity creates valuable data for prevention efforts. File complaints at IC3.gov and ReportFraud.ftc.gov and report the user directly on the dating app or website so platform support teams can act.

Emotional and Financial Impact on Victims

The true cost of digital deception reaches far beyond dollars — it leaves long-lasting emotional scars. Many people feel profound shame and self-blame after discovering they’ve been manipulated, which often delays reporting and recovery. That silence is one reason official totals understate the true scale of romance fraud and related schemes.

Recent agency data shows billions lost annually to online fraud, but countless cases go unreported each year. Military personnel and other professionals frequently hesitate to come forward because of career or reputation concerns, which further obscures the full picture.

Recognizing Early Warning Signs of Manipulation

Artificial relationships often follow repeatable patterns. Genuine connections typically develop gradually; deceptive ones accelerate intimacy and then introduce financial or logistical pressure. Watch for these red flags and treat them seriously.

Key red flags include:

- Rapid progression to intense emotional intimacy

- Constant flattery that feels excessive or scripted

- Reluctance to have spontaneous video calls or to verify identity

- Gradual introduction of financial problems or urgent asks for money

- Attempts to isolate you from friends or discourage outside opinions

If you suspect you’re being targeted — do this now

- Stop all money transfers immediately — do not send wire transfers, cryptocurrency, or gift cards.

- Preserve evidence: save messages, profile links, screenshots, and transaction records.

- Call your bank or payment provider to report the transaction and ask about reversing or freezing payments.

- Report the account to the app or website and file a complaint with IC3/FTC — prompt reporting helps investigators and protects others.

- Seek support: talk with trusted friends or a counselor; victim-support organizations can assist with emotional recovery and practical steps.

In my experience, victims benefit enormously from outside perspective: a small support group or counselor who understands online fraud can help restore trust and speed recovery. If you need help now, contact your bank and the platform’s support team immediately, and see the reporting question in the FAQ for where to file a complaint.

Conclusion

The tools for protecting yourself from digital deception are now within reach when you combine personal vigilance with community resources and timely reporting. You’ve seen how modern fraud operations target emotions first, not technical vulnerabilities, and why a layered approach matters in 2026.

Your multi-layered defense strategy should include quick identity checks (spontaneous video calls with challenge-response), reverse image and reverse video searches on media, secure account hygiene (unique passwords and 2FA), and an absolute rule: never send money to someone you haven’t met in person. Avoid untraceable methods like wire transfers, gift cards, or cryptocurrency when dealing with unknown people.

Remember: romance scams affect people across all demographics who are simply seeking connection. Stay current by following security news and asking the right questions when something about a conversation or investment offer feels off.

Convincing personas are now the norm, but practical checks and community reporting still stop many scams before they escalate. If you think you’re a victim, get help immediately: contact your bank, preserve evidence, and report the account to the app and to IC3/FTC. Your actions protect both you and other people from repeated fraud.

FAQ

How can I tell if a profile is fake?

Look for inconsistent details across accounts: mismatched job/location, very few friends or connections, and timelines that don’t add up. Run a reverse image search (Google Lens, TinEye) on profile photos and also extract clear frames from any shared videos to run as image searches. Check email/app handles and linked social media — small mismatches often reveal cloned identities. If things look too perfect or identical images appear elsewhere, slow down the conversation and verify before sharing personal information.

What should I do if someone I met online asks for money?

This is a major red flag. Never send funds, cryptocurrency, wire transfers, or gift cards to someone you haven’t met in person. Stop all transfers immediately if you suspect fraud, preserve evidence (messages, profile links, transaction receipts), contact your bank or payment provider for help, and report the account to the app’s support team and to IC3/FTC.

Are video calls a safe way to verify someone’s identity?

Video calls are better than text but can be faked with deepfakes. Use challenge-response verification: ask the person to hold up today’s newspaper, say a specific random phrase you pick, or perform a spontaneous action (wave a particular hand). Be cautious of poor-quality video, repeated glitches, or refusal to comply — these are common evasion tactics. If a call seems suspicious, extract frames from the video and run reverse searches on those images.

How do I check if a video was AI-generated?

Quick steps: 1) Save the original video and take several high-quality frame screenshots; 2) Run those frames through reverse-image tools (Google Lens, TinEye) to check reuse; 3) Look for AI artifacts — weird fingers or hands, inconsistent backgrounds or lighting, warped teeth or facial edges, unnatural blinking or lip-sync issues; 4) Use dedicated synthetic-media forensics tools if available (some open-source and commercial options detect generation markers). If unsure, report the clip to the platform support team and include timestamps and frame screenshots to speed the forensic review.

What is a common emotional manipulation tactic used by scammers?

Scammers frequently create urgency or tragedy to short-circuit rational decision-making — for example, claiming a medical emergency or a time-sensitive business need. They build trust quickly, profess strong feelings, and then introduce a crisis or an “investment opportunity” to extract money. Recognize scripted flattery and sudden crises as red flags.

How are criminals using cryptocurrency in these schemes?

Cryptocurrency is attractive to scammers because transactions are often irreversible and harder to trace. Victims are pressured into sending crypto directly, buying into fake trading platforms, or transferring funds to private wallets for “urgent” needs. If anyone pushes crypto as a payment method, treat it as high risk and do not send funds.

What steps can I take to secure my personal data?

Use strong, unique passwords and a password manager for all accounts, enable two-factor authentication, and keep dating accounts separate from primary email and banking accounts. Be cautious about what you post publicly and avoid sharing identifying details early in a conversation. Watch for phishing attempts via email or website links and verify sender addresses before interacting.

Where can I report a suspected romance fraud or get help?

Report the user on the dating app or website where you met them so platform support teams can act, and file complaints at IC3.gov and ReportFraud.ftc.gov. If you need immediate help, contact your bank or payment provider to try to halt transactions, preserve all evidence, and seek support from victim-assistance organizations.